
Hello World! 🌎 I hope everyone is doing well and learning new things.

When you run an EKS cluster, you may have run into situations where you wanted to authorize your Pods to access AWS services: for example, reading or writing files in S3, or triggering Lambda functions.

So how do you do it?

There are multiple ways to do it:

In this blog, we will focus on point #3: giving a Pod its own IAM role through a Kubernetes service account.

Out of curiosity, do you know what access your Pods get by default in an EKS cluster?

I assume you don't, so let's check:

First of all, create a Pod that has the AWS CLI installed so you can run the `aws sts get-caller-identity` command. To do that, save the YAML below as `aws-cli-pod.yml` and apply it:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: aws-cli
spec:
  containers:
    - image: amazon/aws-cli:latest
      name: aws-cli
      # Override the image's "aws" entrypoint so the container stays alive
      command: ["sleep", "36000"]
```

```shell
kubectl apply -f aws-cli-pod.yml
```

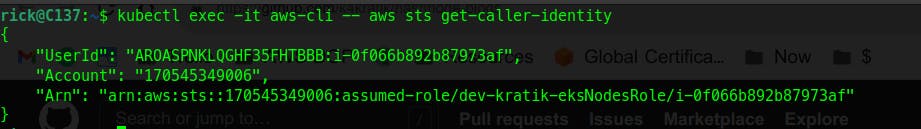

Now, let's exec into that Pod and run the AWS CLI command to see which IAM identity it is currently using:

```shell
kubectl exec -it aws-cli -- aws sts get-caller-identity
```

Oh, okay! Do you know which role this is?

Let's look at the `UserId`:

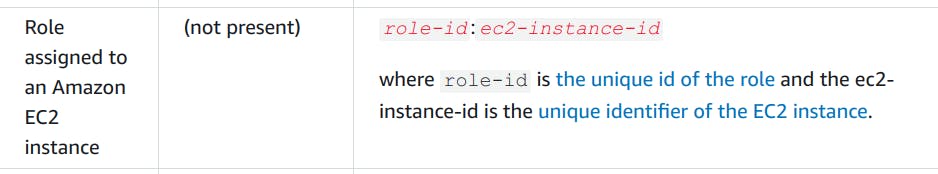

According to the official AWS documentation:

This means the caller is a role assigned to an EC2 instance. Digging further (documentation reference), I learned that the first part of the UserId (`AROASPNKLQGHF35FHTBBB:i-0f066b892b87973af`) is the unique identifier of the role, and the `AROA` prefix indicates that this identity is a role. The part after the colon is the role session name, which for an EC2 instance role is the instance ID.
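To make the `UserId` format concrete, here is a small POSIX shell sketch that splits the value into the role's unique ID and the session name. It assumes the `ROLE_ID:SESSION_NAME` layout described above; the `AIDA` branch (the prefix of IAM user IDs) is there only for contrast, and `split_user_id` is a hypothetical helper, not part of the AWS CLI.

```shell
# Hypothetical helper: split an STS UserId of the form "ROLE_ID:SESSION_NAME".
split_user_id() {
  user_id="$1"
  role_id="${user_id%%:*}"      # everything before the first colon
  session_name="${user_id#*:}"  # everything after the first colon
  # The prefix of the unique ID tells us what kind of identity it is.
  case "$role_id" in
    AROA*) kind="role" ;;
    AIDA*) kind="IAM user" ;;
    *)     kind="unknown" ;;
  esac
  printf 'unique id: %s\nkind: %s\nsession: %s\n' "$role_id" "$kind" "$session_name"
}

# The value seen in the output above
split_user_id "AROASPNKLQGHF35FHTBBB:i-0f066b892b87973af"
```

For the value above, the session part is the EC2 instance ID, which is exactly what tells us these are the node's credentials.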

OK, so the next question: where is this role assigned, and how does the Pod end up assuming it?

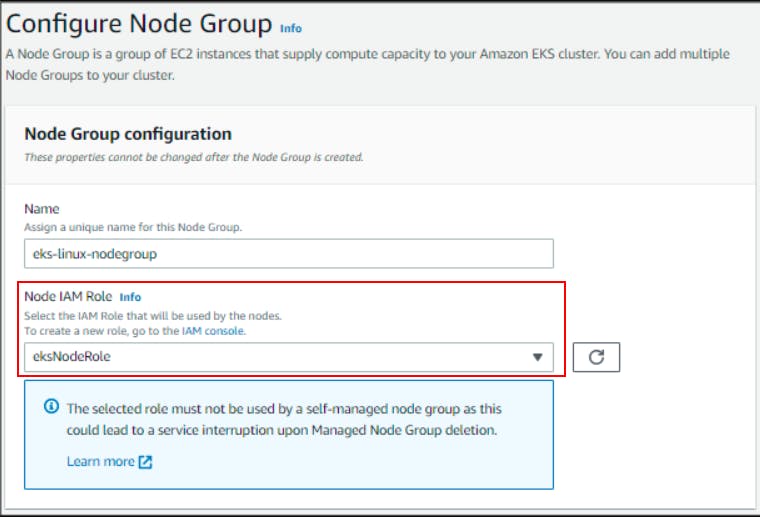

Answer: when we create an EKS cluster or node group, whether with CloudFormation, Terraform, or the AWS Console, we have to assign an IAM role to the EKS worker nodes. Because our Pods run on those nodes, they inherit (or assume) the permissions of that node role; the AWS CLI and SDKs inside the Pod typically fetch the node role's credentials from the EC2 instance metadata service.

AWS CloudFormation Reference

AWS Console Reference (check image below)

Terraform Reference

You can read more about the purpose of the EKS node IAM role here.

As we saw earlier, by default every Pod assumes the IAM role assigned to the node it is running on.

Coming back to the original question, I can now see a way to give any Pod the IAM access it needs: just add those policies to the EKS node IAM role, right?

But wait: it works, but it is NOT A GOOD PRACTICE!!! Every Pod on those nodes will get that access, even the ones that don't need it. ⚠️ This violates the principle of least privilege.

🤔 So how do we assign a specific role to a Pod without touching the IAM role of the EKS nodes?

Service Accounts to the Rescue 😉

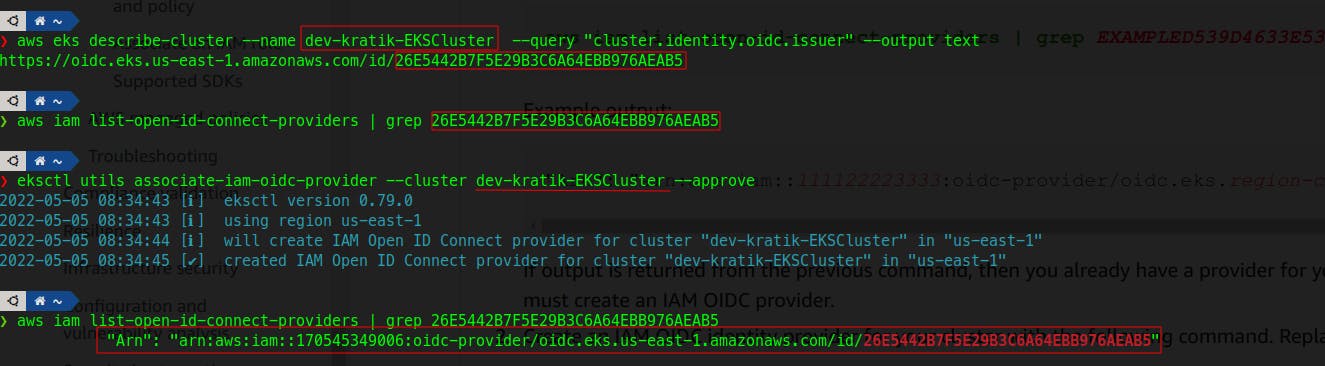

1. Create an OIDC provider for your EKS cluster

I am using the eksctl (link) utility here to manage and operate my EKS cluster.

```shell
eksctl utils associate-iam-oidc-provider --cluster NAME_OF_YOUR_CLUSTER --approve
```

Side note: also check this AWS article, which includes one more step: checking whether an IAM OIDC provider already exists for your cluster's OIDC issuer.

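As a sketch of that extra check, assuming the issuer URL has the usual `https://oidc.eks.REGION.amazonaws.com/id/ID` shape, the hypothetical helper below pulls out the trailing ID. You can then search for that ID in the output of `aws iam list-open-id-connect-providers`; if it shows up, an IAM OIDC provider already exists for the cluster.

```shell
# Hypothetical helper: extract the OIDC ID (last path segment) from an EKS issuer URL.
# In a real cluster, get the URL with:
#   aws eks describe-cluster --name NAME_OF_YOUR_CLUSTER \
#     --query "cluster.identity.oidc.issuer" --output text
oidc_id_from_issuer() {
  # Splitting "https://oidc.eks.REGION.amazonaws.com/id/ID" on "/" puts the ID in field 5
  printf '%s\n' "$1" | cut -d '/' -f 5
}

# Placeholder issuer URL, for illustration only
oidc_id_from_issuer "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
```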

2. Create the role you want to attach to your Pod

2.1 Create the JSON policy document

IAM-Policy-for-listing-buckets.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:ListAllMyBuckets"
      ],
      "Resource": [
        "*"
      ]
    }
  ]
}
```

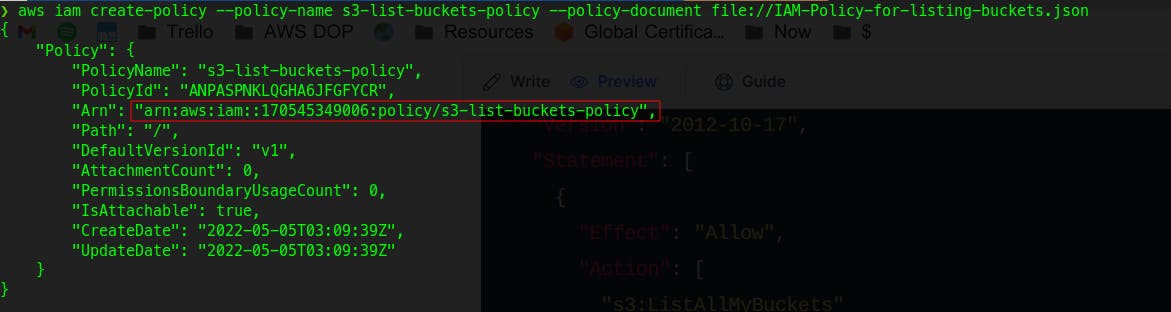

2.2 Create the IAM policy

Now fire up the AWS CLI command to create your policy:

```shell
aws iam create-policy --policy-name s3-list-buckets-policy --policy-document file://IAM-Policy-for-listing-buckets.json
```

You will see output like the one below. Copy the ARN of the new policy from it.

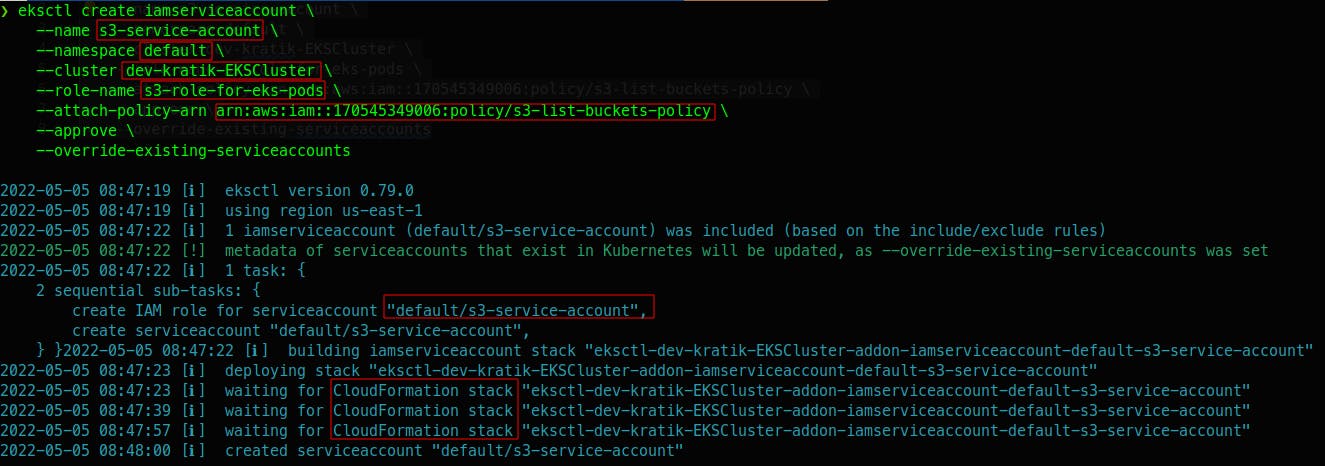
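If you would rather not copy the ARN by hand, note that a customer-managed policy created at the default path has a predictable ARN. This is a minimal sketch with a placeholder account ID; `policy_arn` is a hypothetical helper.

```shell
# Hypothetical helper: build the ARN of a customer-managed policy at the default path "/".
# Your account ID is returned by:
#   aws sts get-caller-identity --query Account --output text
# Alternatively, append --query 'Policy.Arn' --output text to the create-policy command.
policy_arn() {
  account_id="$1"
  policy_name="$2"
  printf 'arn:aws:iam::%s:policy/%s\n' "$account_id" "$policy_name"
}

# 111122223333 is a placeholder account ID
policy_arn 111122223333 s3-list-buckets-policy
```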

2.3 Create an IAM role for a service account

Let's run the eksctl command below to create the IAM role and the service account:

```shell
eksctl create iamserviceaccount \
  --name NAME_OF_YOUR_SERVICE_ACCOUNT \
  --namespace NAME_OF_YOUR_K8S_NAMESPACE \
  --cluster NAME_OF_EKS_CLUSTER \
  --role-name NAME_OF_YOUR_IAM_ROLE \
  --attach-policy-arn ARN_OF_POLICY_YOU_JUST_CREATED \
  --approve \
  --override-existing-serviceaccounts
```

Output

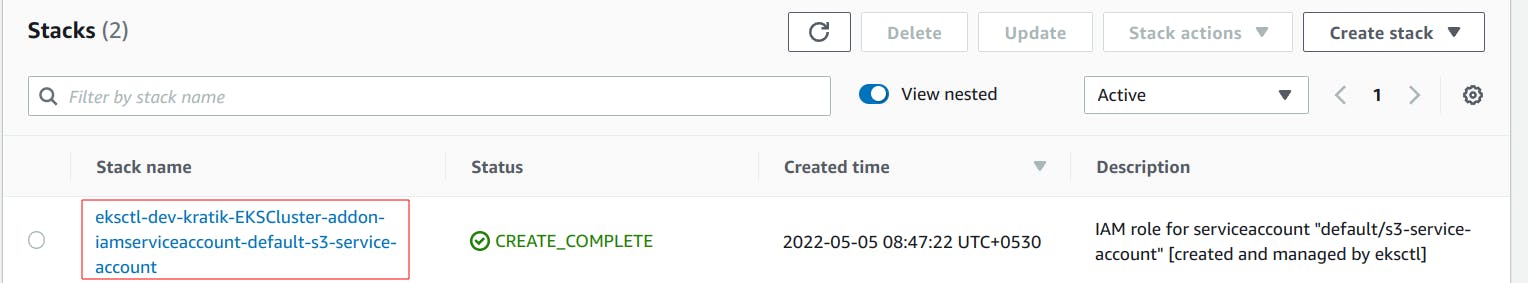

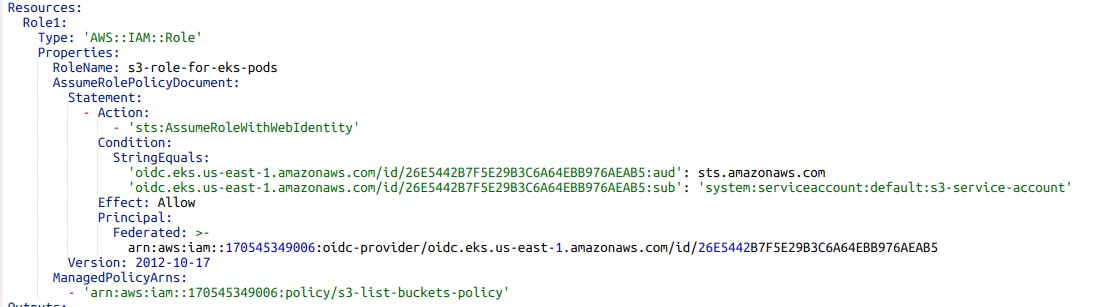

This creates a CloudFormation stack (which creates the IAM role) as well as the service account we defined in the command arguments.

I encourage you all to open that CloudFormation template and check how the role was created and, most importantly, how the role's `AssumeRolePolicyDocument` is defined.

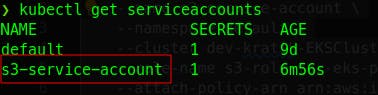

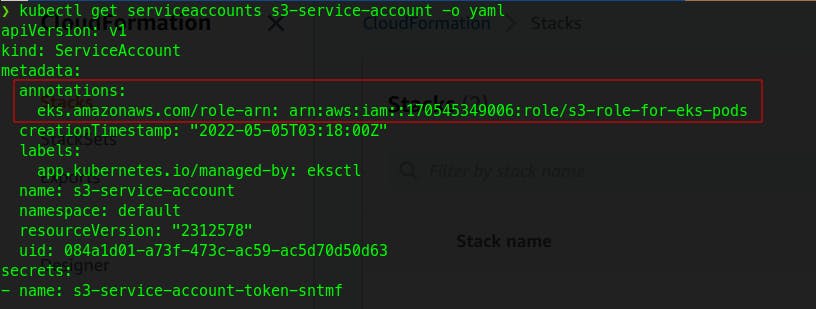
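For reference, here is roughly the shape of the trust policy that this setup relies on, printed by a hypothetical `irsa_trust_policy` helper. The account ID, issuer, namespace, and service account name are placeholders, and the document eksctl generates for your stack may differ in details, so compare it with your CloudFormation template. The key parts are the `Federated` principal pointing at the cluster's OIDC provider, the `sts:AssumeRoleWithWebIdentity` action, and the `sub` condition that pins the role to a single `system:serviceaccount:NAMESPACE:NAME`.

```shell
# Hypothetical helper: print an IAM trust policy for one Kubernetes service account.
irsa_trust_policy() {
  account_id="$1"   # AWS account ID
  issuer_host="$2"  # issuer without "https://", e.g. oidc.eks.REGION.amazonaws.com/id/ID
  namespace="$3"    # Kubernetes namespace of the service account
  sa_name="$4"      # Kubernetes service account name
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${account_id}:oidc-provider/${issuer_host}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${issuer_host}:aud": "sts.amazonaws.com",
          "${issuer_host}:sub": "system:serviceaccount:${namespace}:${sa_name}"
        }
      }
    }
  ]
}
EOF
}

# Placeholder values, for illustration only
irsa_trust_policy 111122223333 \
  oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE \
  default s3-service-account
```

The `sub` condition is what keeps every other service account (and the node itself) from assuming this role.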

To check what happened in our EKS cluster:

```shell
kubectl get serviceaccounts
kubectl get serviceaccounts s3-service-account -o yaml
```
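In that YAML, the part to look for is the `eks.amazonaws.com/role-arn` annotation, which links the service account to the IAM role. As a sketch (placeholder names and ARN; `service_account_manifest` is a hypothetical helper), an equivalent service account created without eksctl would look like the manifest printed below, which you could pipe into `kubectl apply -f -`. The annotation alone grants nothing: the role's trust policy must also allow this service account.

```shell
# Hypothetical helper: print a ServiceAccount manifest annotated with an IAM role ARN.
service_account_manifest() {
  name="$1"
  namespace="$2"
  role_arn="$3"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ${name}
  namespace: ${namespace}
  annotations:
    eks.amazonaws.com/role-arn: ${role_arn}
EOF
}

# Placeholder values; add "| kubectl apply -f -" to actually create it
service_account_manifest s3-service-account default \
  arn:aws:iam::111122223333:role/NAME_OF_YOUR_IAM_ROLE
```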

3. Associate the service account with your Pod so it can have access to your Role

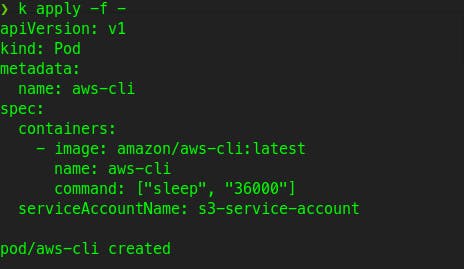

Remember the aws-cli Pod we created at the start of this blog? Let's tweak it a bit and see what happens now.

Delete the old Pod:

```shell
kubectl delete pod aws-cli
```

Update `aws-cli-pod.yml` as follows:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: aws-cli
spec:
  containers:
    - image: amazon/aws-cli:latest
      name: aws-cli
      command: ["sleep", "36000"]
  # Run the Pod as the service account that is annotated with our IAM role
  serviceAccountName: s3-service-account
```

```shell
kubectl apply -f aws-cli-pod.yml
```

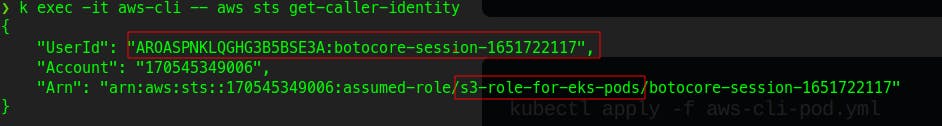

Now check which IAM identity our Pod is using by running `aws sts get-caller-identity` inside it again:

Wait, this time it is different: our new role shows up, and it is being assumed by our Pod.

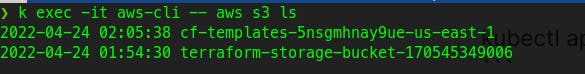
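What changed under the hood? On EKS, the Pod Identity Webhook sees the annotated service account and injects the `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` environment variables into the Pod, along with a projected service account token (typically at `/var/run/secrets/eks.amazonaws.com/serviceaccount/token`). The AWS CLI exchanges that token for the role's credentials via `sts:AssumeRoleWithWebIdentity`; you can see the variables with `kubectl exec aws-cli -- env | grep AWS_`. The token is a JWT, and the sketch below (hypothetical helper `jwt_payload`, assuming a `base64` that supports `-d`, as GNU coreutils does) decodes its payload so you can compare the `sub` claim with the trust policy condition.

```shell
# Hypothetical helper: decode the payload (second dot-separated segment) of a JWT.
# JWTs use unpadded base64url, so map it back to standard base64 and re-pad first.
jwt_payload() {
  payload=$(printf '%s' "$1" | cut -d '.' -f 2 | tr '_-' '/+')
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage from your workstation:
#   token=$(kubectl exec aws-cli -- cat /var/run/secrets/eks.amazonaws.com/serviceaccount/token)
#   jwt_payload "$token"
```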

Let's verify the access by listing all S3 buckets from inside the Pod with `kubectl exec -it aws-cli -- aws s3 ls`:

Woohoo! That works!!!

Wait, let me add more excitement!

What do you think about this whole IAM & Service Account thing? Did you learn something new?

Let me know in the comments. Thanks for reading.